Hello everyone!

A quick post to introduce Gladys Assistant v4.25.1, which was released last Friday and should already be deployed on your instances.

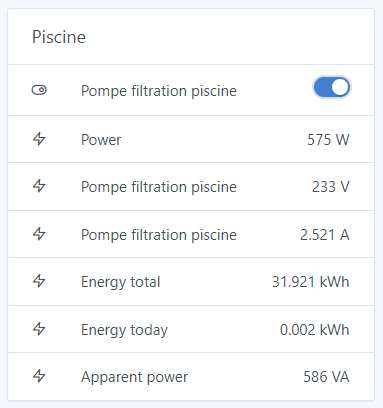

Tasmota improvements around consumption tracking

The Tasmota integration had several unit issues in its consumption tracking features. These are now fixed in Gladys.

Thanks to @Terdious for the PR, and to @GBoulvin for the testing!

Fix for a camera-related bug (ffmpeg)

For some users, when their camera didn’t respond well to Gladys requests, the ffmpeg processes remained active and accumulated on the user’s system, consuming RAM unnecessarily.

This update limits requests to fetch a camera image to 10 seconds.

Beyond that, the process is terminated to avoid saturating RAM.
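
For the curious, here is a minimal sketch of the general idea: run a command with a deadline and kill it if it overruns. It is written in Python for brevity and is not the actual Gladys code. The helper name `run_with_timeout` and the ffmpeg command shown in the comment are assumptions for illustration only.

```python
import subprocess


def run_with_timeout(cmd, timeout_s=10.0):
    """Run cmd and return its stdout as bytes.

    Returns None if the command fails or runs longer than timeout_s.
    Hypothetical helper, not part of Gladys.
    """
    try:
        result = subprocess.run(
            cmd,
            capture_output=True,
            timeout=timeout_s,
            check=False,
        )
    except subprocess.TimeoutExpired:
        # subprocess.run kills the child process and waits for it
        # before raising, so no stray process is left behind.
        return None
    return result.stdout if result.returncode == 0 else None


# Assumed usage: grab a single frame from a camera stream.
# The URL and output path are placeholders.
# run_with_timeout(
#     ["ffmpeg", "-y", "-i", "rtsp://camera.local/stream",
#      "-frames:v", "1", "/tmp/frame.jpg"],
#     timeout_s=10.0,
# )
```

The point of the design is that the deadline and the cleanup live in the same place: whoever starts the process is also responsible for ending it, so a camera that never answers cannot pile up processes.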

Thanks to @lmilcent for reporting the bug!

Fix for a bug in the scene editor interface

The “Execute only when the threshold is passed” button was no longer clickable.

This is now fixed.

Fix for a bug in the new “Devices” widget

The new “Devices” widget was introduced in the previous Gladys release, and it had a bug: roller shutters could not be controlled from it.

This version fixes it.

Improvement to m² units

All units with an exponent now display the exponent properly (for example m²) instead of a plain “2”.

The full changelog is available here.

How to update?

If you installed Gladys with the official Raspberry Pi OS image, your instances will update automatically in the coming hours. This can take up to 24 hours, so don’t worry if it doesn’t happen right away.

If you installed Gladys with Docker, make sure you are using Watchtower (see the documentation).