I was a bit on edge when the whole Zigbee setup went down this morning.

The zigbee2mqtt container would no longer start.

The solution is here: Docker | Zigbee2MQTT

Salut,

merci pour le lien car je me galère depuis ce matin aussi…

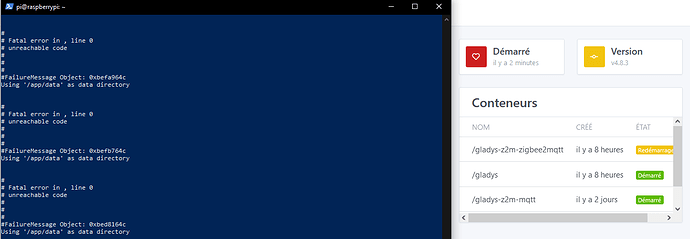

Par contre je n’ai pas réussis même avec les explications ![]()

Since I rebooted, Gladys won't start either…

```
$ docker ps -a
CONTAINER ID   IMAGE                       COMMAND                  CREATED       STATUS         PORTS      NAMES
37873496b294   koenkk/zigbee2mqtt:latest   "docker-entrypoint.s…"   6 hours ago   Up 6 minutes              gladys-z2m-zigbee2mqtt
e73406a91e11   containrrr/watchtower       "/watchtower --clean…"   3 weeks ago   Up 6 minutes   8080/tcp   watchtower
```

```
$ docker images
REPOSITORY                                 TAG      IMAGE ID       CREATED         SIZE
koenkk/zigbee2mqtt                         latest   1eeef661895c   9 hours ago     138MB
eclipse-mosquitto                          2        6483687c39a6   3 days ago      9.89MB
gladysassistant/gladys                     v4       fa56e4f53578   4 days ago      482MB
containrrr/watchtower                      latest   72324c978d08   2 months ago    14.5MB
gladysassistant/gladys-setup-in-progress   latest   cc0d3cac5829   12 months ago   16.4MB
```

It's getting worse and worse: now Docker won't even start.

```
$ sudo systemctl status docker
● docker.service - Docker Application Container Engine
     Loaded: loaded (/lib/systemd/system/docker.service; enabled; vendor preset: enabled)
     Active: failed (Result: exit-code) since Fri 2022-04-01 17:52:59 CEST; 7min ago
       Docs: https://docs.docker.com
    Process: 897 ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock (code=exited, status=2)
   Main PID: 897 (code=exited, status=2)

avril 01 17:52:59 raspberrypi systemd[1]: docker.service: Service RestartSec=2s expired, scheduling restart.
avril 01 17:52:59 raspberrypi systemd[1]: docker.service: Scheduled restart job, restart counter is at 3.
avril 01 17:52:59 raspberrypi systemd[1]: Stopped Docker Application Container Engine.
avril 01 17:52:59 raspberrypi systemd[1]: docker.service: Start request repeated too quickly.
avril 01 17:52:59 raspberrypi systemd[1]: docker.service: Failed with result 'exit-code'.
avril 01 17:52:59 raspberrypi systemd[1]: Failed to start Docker Application Container Engine.
```

```
$ sudo dockerd --debug
INFO[2022-04-01T18:00:35.510707183+02:00] Starting up
DEBU[2022-04-01T18:00:35.513247742+02:00] Listener created for HTTP on unix (/var/run/docker.sock)
DEBU[2022-04-01T18:00:35.515060220+02:00] Golang's threads limit set to 109080
INFO[2022-04-01T18:00:35.515985218+02:00] parsed scheme: "unix" module=grpc
INFO[2022-04-01T18:00:35.516057382+02:00] scheme "unix" not registered, fallback to default scheme module=grpc
INFO[2022-04-01T18:00:35.516156822+02:00] ccResolverWrapper: sending update to cc: {[{unix:///run/containerd/containerd.sock <nil> 0 <nil>}] <nil> <nil>} module=grpc
INFO[2022-04-01T18:00:35.516220949+02:00] ClientConn switching balancer to "pick_first" module=grpc
DEBU[2022-04-01T18:00:35.516948640+02:00] metrics API listening on /var/run/docker/metrics.sock
INFO[2022-04-01T18:00:35.520234760+02:00] parsed scheme: "unix" module=grpc
INFO[2022-04-01T18:00:35.520309905+02:00] scheme "unix" not registered, fallback to default scheme module=grpc
INFO[2022-04-01T18:00:35.520399531+02:00] ccResolverWrapper: sending update to cc: {[{unix:///run/containerd/containerd.sock <nil> 0 <nil>}] <nil> <nil>} module=grpc
INFO[2022-04-01T18:00:35.520446640+02:00] ClientConn switching balancer to "pick_first" module=grpc
DEBU[2022-04-01T18:00:35.523514102+02:00] Using default logging driver json-file
DEBU[2022-04-01T18:00:35.523791312+02:00] processing event stream module=libcontainerd namespace=plugins.moby
DEBU[2022-04-01T18:00:35.524027562+02:00] [graphdriver] priority list: [btrfs zfs overlay2 fuse-overlayfs aufs overlay devicemapper vfs]
DEBU[2022-04-01T18:00:35.536603138+02:00] backingFs=extfs, projectQuotaSupported=false, indexOff="index=off,", userxattr="" storage-driver=overlay2
INFO[2022-04-01T18:00:35.536677487+02:00] [graphdriver] using prior storage driver: overlay2
DEBU[2022-04-01T18:00:35.536745132+02:00] Initialized graph driver overlay2
DEBU[2022-04-01T18:00:35.554617373+02:00] No quota support for local volumes in /var/lib/docker/volumes: Filesystem does not support, or has not enabled quotas
WARN[2022-04-01T18:00:35.559038574+02:00] Your kernel does not support cgroup memory limit
WARN[2022-04-01T18:00:35.559155236+02:00] Your kernel does not support CPU realtime scheduler
WARN[2022-04-01T18:00:35.559215752+02:00] Your kernel does not support cgroup blkio weight
WARN[2022-04-01T18:00:35.559299470+02:00] Your kernel does not support cgroup blkio weight_device
DEBU[2022-04-01T18:00:35.559951535+02:00] Max Concurrent Downloads: 3
DEBU[2022-04-01T18:00:35.560024958+02:00] Max Concurrent Uploads: 5
DEBU[2022-04-01T18:00:35.560086493+02:00] Max Download Attempts: 5
INFO[2022-04-01T18:00:35.560355611+02:00] Loading containers: start.
DEBU[2022-04-01T18:00:35.560541381+02:00] processing event stream module=libcontainerd namespace=moby
ERRO[2022-04-01T18:00:35.560908217+02:00] failed to load container container=dc527c5a16c49c77aab3ce60537ee459201e346a8aec83a5879f005fda7a043f error="open /var/lib/docker/containers/dc527c5a16c49c77aab3ce60537ee459201e346a8aec83a5879f005fda7a043f/config.v2.json: no such file or directory"
DEBU[2022-04-01T18:00:35.563962013+02:00] loaded container container=37873496b29494b087d90a893ff2b74b52ac205a3270b3283618876b48a9a684 paused=false running=false
DEBU[2022-04-01T18:00:35.563979253+02:00] loaded container container=e73406a91e113b8e910ac2b83acb72a4fafcb04531d9d73d3ed8182ed123f6e9 paused=false running=false
ERRO[2022-04-01T18:00:35.564507231+02:00] failed to load container container=792375d9bc456d36fdebef0a18e0a8cf4a32c99e52ccd19a9d09a045b9af3b5c error="Container dc527c5a16c49c77aab3ce60537ee459201e346a8aec83a5879f005fda7a043f is stored at 792375d9bc456d36fdebef0a18e0a8cf4a32c99e52ccd19a9d09a045b9af3b5c"
DEBU[2022-04-01T18:00:35.584675041+02:00] restoring container container=e73406a91e113b8e910ac2b83acb72a4fafcb04531d9d73d3ed8182ed123f6e9 paused=false restarting=false running=false
DEBU[2022-04-01T18:00:35.585184315+02:00] restoring container container=37873496b29494b087d90a893ff2b74b52ac205a3270b3283618876b48a9a684 paused=false restarting=false running=false
DEBU[2022-04-01T18:00:35.586889854+02:00] alive: false container=37873496b29494b087d90a893ff2b74b52ac205a3270b3283618876b48a9a684 paused=false restarting=false running=false
DEBU[2022-04-01T18:00:35.586993349+02:00] done restoring container container=37873496b29494b087d90a893ff2b74b52ac205a3270b3283618876b48a9a684 paused=false restarting=false running=false
DEBU[2022-04-01T18:00:35.587717819+02:00] restored container alive=false container=e73406a91e113b8e910ac2b83acb72a4fafcb04531d9d73d3ed8182ed123f6e9 module=libcontainerd namespace=moby pid=0
DEBU[2022-04-01T18:00:35.587807796+02:00] alive: false container=e73406a91e113b8e910ac2b83acb72a4fafcb04531d9d73d3ed8182ed123f6e9 paused=false restarting=false running=false
DEBU[2022-04-01T18:00:35.587855831+02:00] cleaning up dead container process container=e73406a91e113b8e910ac2b83acb72a4fafcb04531d9d73d3ed8182ed123f6e9 paused=false restarting=false running=false
DEBU[2022-04-01T18:00:35.587919088+02:00] done restoring container container=e73406a91e113b8e910ac2b83acb72a4fafcb04531d9d73d3ed8182ed123f6e9 paused=false restarting=false running=false
DEBU[2022-04-01T18:00:35.588009714+02:00] Option Experimental: false
DEBU[2022-04-01T18:00:35.588043934+02:00] Option DefaultDriver: bridge
DEBU[2022-04-01T18:00:35.588075266+02:00] Option DefaultNetwork: bridge
DEBU[2022-04-01T18:00:35.588108135+02:00] Network Control Plane MTU: 1500
DEBU[2022-04-01T18:00:35.618439439+02:00] /usr/sbin/iptables, [--wait -t filter -C FORWARD -j DOCKER-ISOLATION]
DEBU[2022-04-01T18:00:35.626656350+02:00] /usr/sbin/iptables, [--wait -t nat -D PREROUTING -m addrtype --dst-type LOCAL -j DOCKER]
DEBU[2022-04-01T18:00:35.635582378+02:00] /usr/sbin/iptables, [--wait -t nat -D OUTPUT -m addrtype --dst-type LOCAL ! --dst 127.0.0.0/8 -j DOCKER]
DEBU[2022-04-01T18:00:35.644694213+02:00] /usr/sbin/iptables, [--wait -t nat -D OUTPUT -m addrtype --dst-type LOCAL -j DOCKER]
DEBU[2022-04-01T18:00:35.653622093+02:00] /usr/sbin/iptables, [--wait -t nat -D PREROUTING]
DEBU[2022-04-01T18:00:35.662176878+02:00] /usr/sbin/iptables, [--wait -t nat -D OUTPUT]
DEBU[2022-04-01T18:00:35.670794586+02:00] /usr/sbin/iptables, [--wait -t nat -F DOCKER]
DEBU[2022-04-01T18:00:35.679101140+02:00] /usr/sbin/iptables, [--wait -t nat -X DOCKER]
DEBU[2022-04-01T18:00:35.687456841+02:00] /usr/sbin/iptables, [--wait -t filter -F DOCKER]
DEBU[2022-04-01T18:00:35.695725082+02:00] /usr/sbin/iptables, [--wait -t filter -X DOCKER]
DEBU[2022-04-01T18:00:35.738584928+02:00] /usr/sbin/iptables, [--wait -t filter -F DOCKER-ISOLATION-STAGE-1]
DEBU[2022-04-01T18:00:35.746841281+02:00] /usr/sbin/iptables, [--wait -t filter -X DOCKER-ISOLATION-STAGE-1]
DEBU[2022-04-01T18:00:35.828486812+02:00] /usr/sbin/iptables, [--wait -t filter -F DOCKER-ISOLATION-STAGE-2]
DEBU[2022-04-01T18:00:35.836826384+02:00] /usr/sbin/iptables, [--wait -t filter -X DOCKER-ISOLATION-STAGE-2]
DEBU[2022-04-01T18:00:35.844812637+02:00] /usr/sbin/iptables, [--wait -t filter -F DOCKER-ISOLATION]
DEBU[2022-04-01T18:00:35.853513416+02:00] /usr/sbin/iptables, [--wait -t filter -X DOCKER-ISOLATION]
DEBU[2022-04-01T18:00:35.861614108+02:00] /usr/sbin/iptables, [--wait -t nat -n -L DOCKER]
DEBU[2022-04-01T18:00:35.870122877+02:00] /usr/sbin/iptables, [--wait -t nat -N DOCKER]
DEBU[2022-04-01T18:00:35.878122092+02:00] /usr/sbin/iptables, [--wait -t filter -n -L DOCKER]
DEBU[2022-04-01T18:00:35.886472478+02:00] /usr/sbin/iptables, [--wait -t filter -n -L DOCKER-ISOLATION-STAGE-1]
DEBU[2022-04-01T18:00:35.895918429+02:00] /usr/sbin/iptables, [--wait -t filter -n -L DOCKER-ISOLATION-STAGE-2]
DEBU[2022-04-01T18:00:35.906098793+02:00] /usr/sbin/iptables, [--wait -t filter -N DOCKER-ISOLATION-STAGE-2]
DEBU[2022-04-01T18:00:35.914238502+02:00] /usr/sbin/iptables, [--wait -t filter -C DOCKER-ISOLATION-STAGE-1 -j RETURN]
DEBU[2022-04-01T18:00:35.922652682+02:00] /usr/sbin/iptables, [--wait -A DOCKER-ISOLATION-STAGE-1 -j RETURN]
DEBU[2022-04-01T18:00:35.931143025+02:00] /usr/sbin/iptables, [--wait -t filter -C DOCKER-ISOLATION-STAGE-2 -j RETURN]
DEBU[2022-04-01T18:00:35.939629794+02:00] /usr/sbin/iptables, [--wait -A DOCKER-ISOLATION-STAGE-2 -j RETURN]
DEBU[2022-04-01T18:00:35.969880768+02:00] /usr/sbin/iptables, [--wait -t nat -C POSTROUTING -s 172.17.0.0/16 ! -o docker0 -j MASQUERADE]
DEBU[2022-04-01T18:00:35.978455071+02:00] /usr/sbin/iptables, [--wait -t nat -C DOCKER -i docker0 -j RETURN]
DEBU[2022-04-01T18:00:35.986780291+02:00] /usr/sbin/iptables, [--wait -t nat -I DOCKER -i docker0 -j RETURN]
DEBU[2022-04-01T18:00:35.995347464+02:00] /usr/sbin/iptables, [--wait -D FORWARD -i docker0 -o docker0 -j DROP]
DEBU[2022-04-01T18:00:36.004687715+02:00] /usr/sbin/iptables, [--wait -t filter -C FORWARD -i docker0 -o docker0 -j ACCEPT]
DEBU[2022-04-01T18:00:36.013925527+02:00] /usr/sbin/iptables, [--wait -t filter -C FORWARD -i docker0 ! -o docker0 -j ACCEPT]
DEBU[2022-04-01T18:00:36.023127453+02:00] /usr/sbin/iptables, [--wait -t nat -C PREROUTING -m addrtype --dst-type LOCAL -j DOCKER]
DEBU[2022-04-01T18:00:36.031653058+02:00] /usr/sbin/iptables, [--wait -t nat -A PREROUTING -m addrtype --dst-type LOCAL -j DOCKER]
DEBU[2022-04-01T18:00:36.040473613+02:00] /usr/sbin/iptables, [--wait -t nat -C OUTPUT -m addrtype --dst-type LOCAL -j DOCKER ! --dst 127.0.0.0/8]
DEBU[2022-04-01T18:00:36.049259114+02:00] /usr/sbin/iptables, [--wait -t nat -A OUTPUT -m addrtype --dst-type LOCAL -j DOCKER ! --dst 127.0.0.0/8]
DEBU[2022-04-01T18:00:36.058634183+02:00] /usr/sbin/iptables, [--wait -t filter -C FORWARD -o docker0 -j DOCKER]
DEBU[2022-04-01T18:00:36.067769096+02:00] /usr/sbin/iptables, [--wait -t filter -C FORWARD -o docker0 -j DOCKER]
DEBU[2022-04-01T18:00:36.076917841+02:00] /usr/sbin/iptables, [--wait -t filter -C FORWARD -o docker0 -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT]
DEBU[2022-04-01T18:00:36.086160341+02:00] /usr/sbin/iptables, [--wait -t filter -C FORWARD -o docker0 -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT]
DEBU[2022-04-01T18:00:36.095426359+02:00] /usr/sbin/iptables, [--wait -t filter -C FORWARD -j DOCKER-ISOLATION-STAGE-1]
DEBU[2022-04-01T18:00:36.103817284+02:00] /usr/sbin/iptables, [--wait -D FORWARD -j DOCKER-ISOLATION-STAGE-1]
DEBU[2022-04-01T18:00:36.112199747+02:00] /usr/sbin/iptables, [--wait -I FORWARD -j DOCKER-ISOLATION-STAGE-1]
DEBU[2022-04-01T18:00:36.124004825+02:00] /usr/sbin/iptables, [--wait -t filter -C DOCKER-ISOLATION-STAGE-1 -i docker0 ! -o docker0 -j DOCKER-ISOLATION-STAGE-2]
DEBU[2022-04-01T18:00:36.137429631+02:00] /usr/sbin/iptables, [--wait -t filter -I DOCKER-ISOLATION-STAGE-1 -i docker0 ! -o docker0 -j DOCKER-ISOLATION-STAGE-2]
DEBU[2022-04-01T18:00:36.150406049+02:00] /usr/sbin/iptables, [--wait -t filter -C DOCKER-ISOLATION-STAGE-2 -o docker0 -j DROP]
DEBU[2022-04-01T18:00:36.164677875+02:00] /usr/sbin/iptables, [--wait -t filter -I DOCKER-ISOLATION-STAGE-2 -o docker0 -j DROP]
DEBU[2022-04-01T18:00:36.178949794+02:00] Network (44ea9e9) restored
INFO[2022-04-01T18:00:36.473201207+02:00] Removing stale sandbox 4d1f8b40a6f7675ceb351586fb46b5941c0925844cb072ab005b584e6909f1d6 (e73406a91e113b8e910ac2b83acb72a4fafcb04531d9d73d3ed8182ed123f6e9)
panic: page 3 already freed

goroutine 1 [running]:
github.com/docker/docker/vendor/go.etcd.io/bbolt.(*freelist).free(0x4483440, 0x1b09, 0x0, 0xa5412000)
	/go/src/github.com/docker/docker/vendor/go.etcd.io/bbolt/freelist.go:175 +0x47c
github.com/docker/docker/vendor/go.etcd.io/bbolt.(*node).spill(0x4483500, 0x49f3670, 0x2)
	/go/src/github.com/docker/docker/vendor/go.etcd.io/bbolt/node.go:359 +0x434
github.com/docker/docker/vendor/go.etcd.io/bbolt.(*node).spill(0x4483480, 0x4c90080, 0x4ba7018)
	/go/src/github.com/docker/docker/vendor/go.etcd.io/bbolt/node.go:346 +0xa0
github.com/docker/docker/vendor/go.etcd.io/bbolt.(*Bucket).spill(0x4c90060, 0x4c90000, 0x4ba7140)
	/go/src/github.com/docker/docker/vendor/go.etcd.io/bbolt/bucket.go:570 +0x370
github.com/docker/docker/vendor/go.etcd.io/bbolt.(*Bucket).spill(0x4532d8c, 0xc089e649, 0x3e97f725)
	/go/src/github.com/docker/docker/vendor/go.etcd.io/bbolt/bucket.go:537 +0x2dc
github.com/docker/docker/vendor/go.etcd.io/bbolt.(*Tx).Commit(0x4532d80, 0x0, 0x0)
	/go/src/github.com/docker/docker/vendor/go.etcd.io/bbolt/tx.go:160 +0x8c
github.com/docker/docker/vendor/go.etcd.io/bbolt.(*DB).Update(0x4633e00, 0x4ba725c, 0x0, 0x0)
	/go/src/github.com/docker/docker/vendor/go.etcd.io/bbolt/db.go:701 +0xf4
github.com/docker/docker/vendor/github.com/docker/libkv/store/boltdb.(*BoltDB).AtomicDelete(0x4bb3440, 0x4c8a4e0, 0x5d, 0x4b85f00, 0x0, 0x0, 0x0)
	/go/src/github.com/docker/docker/vendor/github.com/docker/libkv/store/boltdb/boltdb.go:329 +0x11c
github.com/docker/docker/vendor/github.com/docker/libnetwork/datastore.(*datastore).DeleteObjectAtomic(0x448cf00, 0x2712c54, 0x44c5680, 0x0, 0x0)
	/go/src/github.com/docker/docker/vendor/github.com/docker/libnetwork/datastore/datastore.go:623 +0x3e4
github.com/docker/docker/vendor/github.com/docker/libnetwork.(*controller).deleteFromStore(0x4737dd0, 0x2712c54, 0x44c5680, 0x0, 0x0)
	/go/src/github.com/docker/docker/vendor/github.com/docker/libnetwork/store.go:232 +0xfc
github.com/docker/docker/vendor/github.com/docker/libnetwork.(*sandbox).storeDelete(0x44c1200, 0x0, 0x0)
	/go/src/github.com/docker/docker/vendor/github.com/docker/libnetwork/sandbox_store.go:189 +0xc0
github.com/docker/docker/vendor/github.com/docker/libnetwork.(*sandbox).delete(0x44c1200, 0x1, 0x1fc6637, 0x1e)
	/go/src/github.com/docker/docker/vendor/github.com/docker/libnetwork/sandbox.go:252 +0x4bc
github.com/docker/docker/vendor/github.com/docker/libnetwork.(*controller).sandboxCleanup(0x4737dd0, 0x0)
	/go/src/github.com/docker/docker/vendor/github.com/docker/libnetwork/sandbox_store.go:278 +0xcf8
github.com/docker/docker/vendor/github.com/docker/libnetwork.New(0x4bb3300, 0x9, 0x10, 0x4528990, 0x4bb0ac0, 0x4bb3300, 0x9)
	/go/src/github.com/docker/docker/vendor/github.com/docker/libnetwork/controller.go:248 +0x5c0
github.com/docker/docker/daemon.(*Daemon).initNetworkController(0x4542400, 0x4580840, 0x4bb0ac0, 0x46286c0, 0x4542400, 0x495a2a8, 0x4bb0ac0)
	/go/src/github.com/docker/docker/daemon/daemon_unix.go:855 +0x70
github.com/docker/docker/daemon.(*Daemon).restore(0x4542400, 0x471fea0, 0x44c6b00)
	/go/src/github.com/docker/docker/daemon/daemon.go:490 +0x3b8
github.com/docker/docker/daemon.NewDaemon(0x27033e4, 0x471fea0, 0x4580840, 0x4528990, 0x0, 0x0, 0x0)
	/go/src/github.com/docker/docker/daemon/daemon.go:1150 +0x2394
main.(*DaemonCli).start(0x4552d38, 0x45288a0, 0x0, 0x0)
	/go/src/github.com/docker/docker/cmd/dockerd/daemon.go:195 +0x634
main.runDaemon(...)
	/go/src/github.com/docker/docker/cmd/dockerd/docker_unix.go:13
main.newDaemonCommand.func1(0x45406e0, 0x470f188, 0x0, 0x1, 0x0, 0x0)
	/go/src/github.com/docker/docker/cmd/dockerd/docker.go:34 +0x70
github.com/docker/docker/vendor/github.com/spf13/cobra.(*Command).execute(0x45406e0, 0x440e058, 0x1, 0x1, 0x45406e0, 0x440e058)
	/go/src/github.com/docker/docker/vendor/github.com/spf13/cobra/command.go:850 +0x358
github.com/docker/docker/vendor/github.com/spf13/cobra.(*Command).ExecuteC(0x45406e0, 0x0, 0x0, 0x7)
	/go/src/github.com/docker/docker/vendor/github.com/spf13/cobra/command.go:958 +0x298
github.com/docker/docker/vendor/github.com/spf13/cobra.(*Command).Execute(...)
	/go/src/github.com/docker/docker/vendor/github.com/spf13/cobra/command.go:895
main.main()
	/go/src/github.com/docker/docker/cmd/dockerd/docker.go:97 +0x19c
```

I'm rather puzzled, because I tested the Gladys Bullseye image with my old Zigbee dongle and it works out of the box.

Everything was working fine until yesterday…

and this morning, the link between Gladys and zigbee2mqtt was broken.

After a reboot, Gladys wouldn't start either.

After some tinkering, now it's Docker that won't start.

I think I take the prize for this one.

Hello,

since the Gladys update, my Zigbee devices no longer work.

I restarted everything and it's still the same, and yet nothing indicates a problem with MQTT or Zigbee2MQTT.

Same here:

```
#
# Fatal error in , line 0
# unreachable code
#
#
#
#FailureMessage Object: 0x7ed5664c
Using '/app/data' as data directory
#
# Fatal error in , line 0
# unreachable code
#
#
#
#FailureMessage Object: 0x7ef6a64c
```

Thanks, I hadn't paid attention. With the solution, everything works.

Is it the pull that fixes the problem? Are you on Buster?

What does this one say?

@VonOx, if you had clicked the z2m link, you would have seen that the solution was to install the libseccomp2 library for Buster.

No pull, just an apt install.

I was trying to understand why we didn't have any problem with the latest Gladys Raspberry Pi image (Bullseye).

What I don't really get is why it's needed on Buster (it wasn't before).
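A possible explanation for the Buster vs. Bullseye difference (an assumption, not something confirmed in this thread): newer Alpine-based images use 64-bit time syscalls on 32-bit ARM, and as we understand the Alpine 3.13 release notes, Docker's seccomp filter only allows them with libseccomp 2.4.2 or newer. Buster ships libseccomp2 2.3.3 while Bullseye ships 2.5.x, which would fit the "Fatal error ... unreachable code" crash posted above. The minimal sketch below checks the installed version against that assumed 2.4.2 threshold. `version_ge`, `upstream_version` and `check_seccomp` are hypothetical helper names, and the suggested install command assumes buster-backports has already been added to your APT sources, as described on the Zigbee2MQTT Docker page linked at the top of the thread.

```shell
#!/bin/sh
# Sketch: is the installed libseccomp2 recent enough for time64-based
# Alpine images on 32-bit ARM? MIN_SECCOMP is an assumed threshold.
MIN_SECCOMP="2.4.2"

# version_ge A B: succeeds when version A >= version B.
# Relies on GNU coreutils "sort -V" (version ordering).
version_ge() {
    [ "$(printf '%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# upstream_version V: drop the Debian revision, e.g. "2.3.3-4" -> "2.3.3".
upstream_version() {
    printf '%s\n' "${1%%-*}"
}

# check_seccomp: returns 0 if OK, 1 if too old, 2 if not installed.
check_seccomp() {
    # ${Version} is a dpkg-query format field, hence the single quotes.
    installed=$(dpkg-query -W -f='${Version}' libseccomp2 2>/dev/null)
    if [ -z "$installed" ]; then
        echo "libseccomp2 is not installed"
        return 2
    fi
    if version_ge "$(upstream_version "$installed")" "$MIN_SECCOMP"; then
        echo "libseccomp2 $installed: OK"
    else
        echo "libseccomp2 $installed: too old, try: sudo apt install -t buster-backports libseccomp2"
        return 1
    fi
}
```

Usage: save the functions to a file (for example a hypothetical `check-seccomp.sh`) and run `. ./check-seccomp.sh && check_seccomp` on the Raspberry Pi. Buster is now end-of-life, so the backports suite may only be reachable through archive.debian.org today.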

Thanks for the feedback.

No worries, I'm just teasing. But I think they pushed an update (I've noticed a release on the 1st of every month).

Then again, what's it for ^^

Maybe an April Fool's joke.

Problem solved with the linked solution.